Paying for care can be stressful.

At a moment when patients are most vulnerable, they must navigate a complex payment process. The stress is compounded when the bill exceeds their ability to pay, even with a payment plan.

But what if the bill could be lowered to fit an individual’s financial circumstances while still ensuring the provider gets paid for services rendered?

At Cedar, we’ve found that when providers offer discounts at the moment patients are notified about their bill (rather than when they call in), patients are more likely to resolve their bills, and the total amount paid to the provider does not decrease. This is a win-win.

Our data shows that discounts work best when patients have the option to apply the discount to a payment plan, bringing their monthly payment to a manageable (and customized) level. But how can we determine which patients should get discounts? We’ve learned there are a variety of factors that influence a patient’s likelihood of payment and that it’s a complex problem—one for which machine learning (ML) is particularly well-suited.

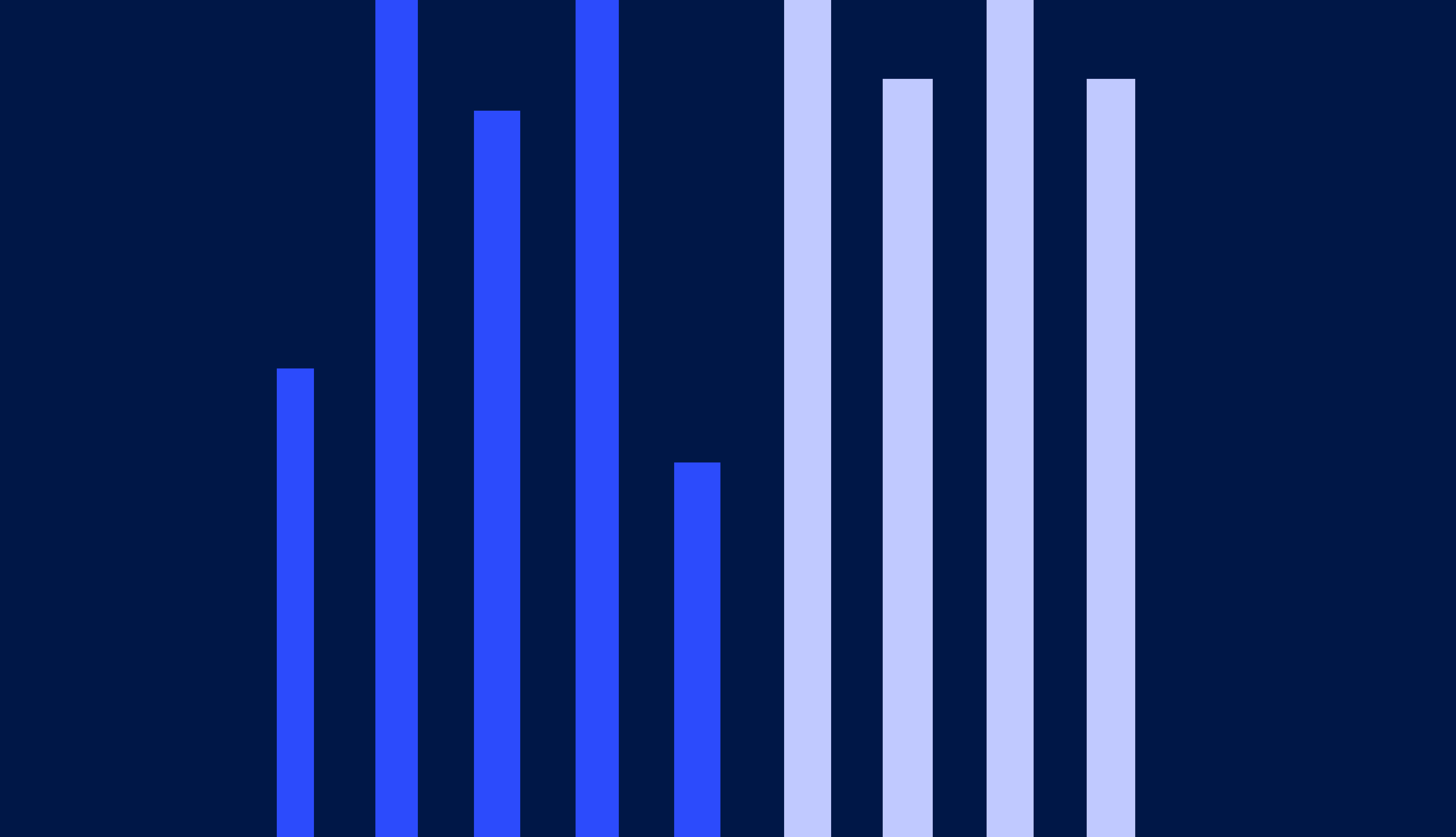

When we began our work on discounting, we knew that it would be important to help the patients who needed it most, as discussed in our previous article. We ultimately developed an ML solution that created a collections lift by identifying the cases where discounts should be given. With our ML solution, we give significantly more discounts to patients with larger bills: around 86% of bills of $800 or more are given discounts, while only 12% of bills under $800 are. We also see that patients with an estimated income of less than $50,000 (based on the median income for their zip code) are more likely to get a discount than patients with an estimated income greater than $50,000. Through our analysis of who is receiving a discount, we can confirm that our ML model is fair, equitable, and making a difference where it matters most.

In this article, I discuss how we approached this challenge to drive toward our mission of improving the affordability of healthcare through ML-powered discounts, and what we learned along the way.

A machine learning model made possible through years of experimentation

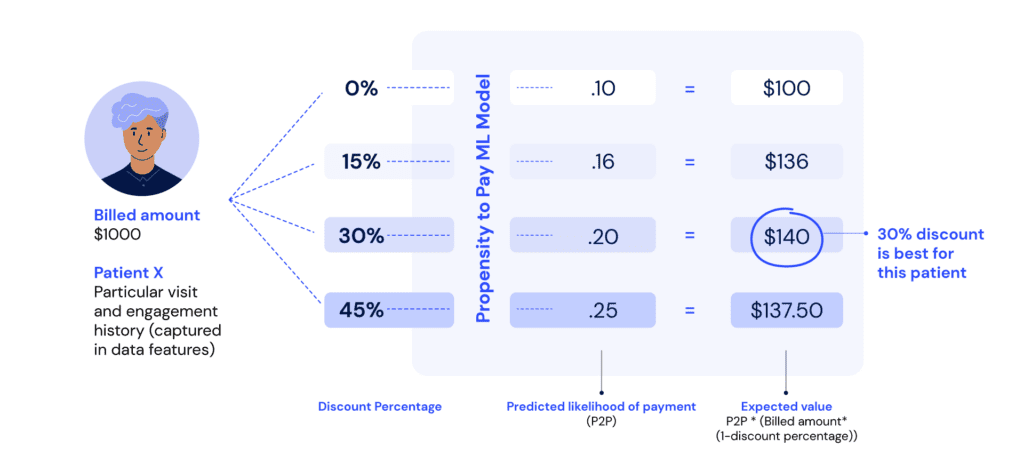

Over the past few years, we have been collecting data on how discounts affect payment outcomes. In 2020, we began an experiment where we randomly assigned patients to receive a 15%, 30%, or 45% discount, or no discount at all. Using this experimental data as the training set, we built a propensity-to-pay ML model that predicts the likelihood that a particular patient will pay their bill, given a particular discount size. By comparing the expected value of collections between discount options, we could select the discount option that was right for that patient.
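The expected-value comparison can be sketched roughly as follows. This is an illustrative simplification, not our production logic: it assumes a bill is either paid in full at the discounted price or not paid at all, and the function names and the toy probabilities are hypothetical stand-ins for the propensity-to-pay model.

```python
from typing import Callable, Sequence, Tuple

# Discount options from the experiment: no discount, 15%, 30%, 45%.
DISCOUNT_OPTIONS: Tuple[float, ...] = (0.0, 0.15, 0.30, 0.45)


def expected_collections(bill_amount: float, discount: float, pay_probability: float) -> float:
    """Expected dollars collected: P(pay | discount) times the discounted bill."""
    return pay_probability * bill_amount * (1.0 - discount)


def choose_discount(
    bill_amount: float,
    predict_pay_probability: Callable[[float], float],
    options: Sequence[float] = DISCOUNT_OPTIONS,
) -> Tuple[float, float]:
    """Return (discount, expected value) for the option with the highest expected value.

    Options are scanned in the given order and only a strictly better value
    replaces the current best, so ties go to the earlier (smaller) discount.
    """
    best_discount = options[0]
    best_value = expected_collections(
        bill_amount, best_discount, predict_pay_probability(best_discount)
    )
    for discount in options[1:]:
        value = expected_collections(bill_amount, discount, predict_pay_probability(discount))
        if value > best_value:
            best_discount, best_value = discount, value
    return best_discount, best_value


def toy_pay_probability(discount: float) -> float:
    """Hypothetical predictions for one patient; a real model would use patient features."""
    return {0.0: 0.20, 0.15: 0.30, 0.30: 0.45, 0.45: 0.50}[discount]


if __name__ == "__main__":
    discount, value = choose_discount(1000.0, toy_pay_probability)
    print(f"chosen discount: {discount:.0%}, expected collections: ${value:.2f}")
```

In this toy example the largest discount is not the winner: a deeper discount raises the chance of payment but lowers the amount collected, and the comparison balances the two.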

We launched our ML model in early 2022, and excitedly waited for results to come in. It took some time because outcomes are measured on 120-day cycles. The outcome metric we measured is collection rate: the amount collected divided by the total original (pre-discount) amount billed.

When we had enough data to evaluate the outcomes, we discovered that the group of patients allocated to the ML model had the lowest collection rate of all, below every patient group that was uniformly given the same discount (or no discount). Upon seeing the results, we were disappointed, but not defeated! ML systems are highly complex and can take multiple iterations to get right. Our ML team sprang into action to understand why our model underperformed. By carefully examining different aspects of the system, we identified three key improvements that we hypothesized would lead to better ML performance.

1. Improving the training data

Those who have experience with ML models are most likely familiar with the following concept: the quality of output produced by an algorithm is heavily dependent on the quality of the input data provided to it. This was one important area to investigate: was our training data up to par?

We had built data aggregation pipelines (on a de-identified basis) that reconstructed the details about each patient and the bill they received at a past moment in time (the exact point at which they received the discount). However, upon closer inspection, we saw the data included information about events that happened after that moment. This is a common error with ML models, known as “data leakage.” We revised our feature calculation logic to ensure that the training data was an accurate representation of the point in time it was intended to capture and that the inputs exactly matched between training data and “inference” data (where inference represents the moment when the ML model makes a new prediction).
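A minimal sketch of a point-in-time feature calculation is shown below. The `PaymentEvent` record, the feature names, and the strict "before the cutoff" rule are assumptions made for illustration; they are not the actual schema of our pipelines. The idea is simply that every feature is computed from events that occurred before the moment the discount was offered, and that the same function is used for both training and inference.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable


@dataclass(frozen=True)
class PaymentEvent:
    """Hypothetical de-identified payment record."""
    patient_id: str
    occurred_at: datetime
    amount: float


def point_in_time_features(
    events: Iterable[PaymentEvent], patient_id: str, as_of: datetime
) -> Dict[str, float]:
    """Aggregate only the events that happened strictly before `as_of`.

    Anything at or after `as_of` would not have been known when the discount
    decision was made, so including it would leak future information.
    """
    visible = [
        e for e in events
        if e.patient_id == patient_id and e.occurred_at < as_of
    ]
    return {
        "prior_payment_count": float(len(visible)),
        "prior_payment_total": sum(e.amount for e in visible),
    }


if __name__ == "__main__":
    history = [
        PaymentEvent("patient-1", datetime(2021, 3, 1), 50.0),
        PaymentEvent("patient-1", datetime(2021, 9, 1), 75.0),  # after the cutoff
    ]
    print(point_in_time_features(history, "patient-1", as_of=datetime(2021, 6, 1)))
```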

We also studied the attributes that were being used as input to the propensity-to-pay model. There were 44 inputs in total, many of which related to the patient’s bill, their visits to the provider, the presence of digital contact methods, and their history of payments. However, our offline model evaluation showed us that we could achieve strong model performance using just 14 inputs (a combination of some original inputs, plus new ones that we engineered). By removing the unneeded inputs, we reduced the model’s tendency to memorize what it sees in the training data (a concept known as overfitting) and encouraged it to focus instead on more generalizable patterns.

2. Incorporating long-term outcomes

When a patient receives a bill, they have 120 days to make a payment or start a payment plan. Therefore, the target variable for our ML model was payment within these 120 days. But as we dug deeper into the historical data we had collected, we saw something interesting: discounts influence collections beyond the first 120 days, because payment plans on smaller bills are more likely to be completed. When we discount a bill, people are less likely to cancel the payment plan that they start, making the discount even more effective in the long run. We had not incorporated this phenomenon into the first version of our model, so the ML system was rarely giving discounts. Once we added this adjustment, our model started giving many more discounts than before.

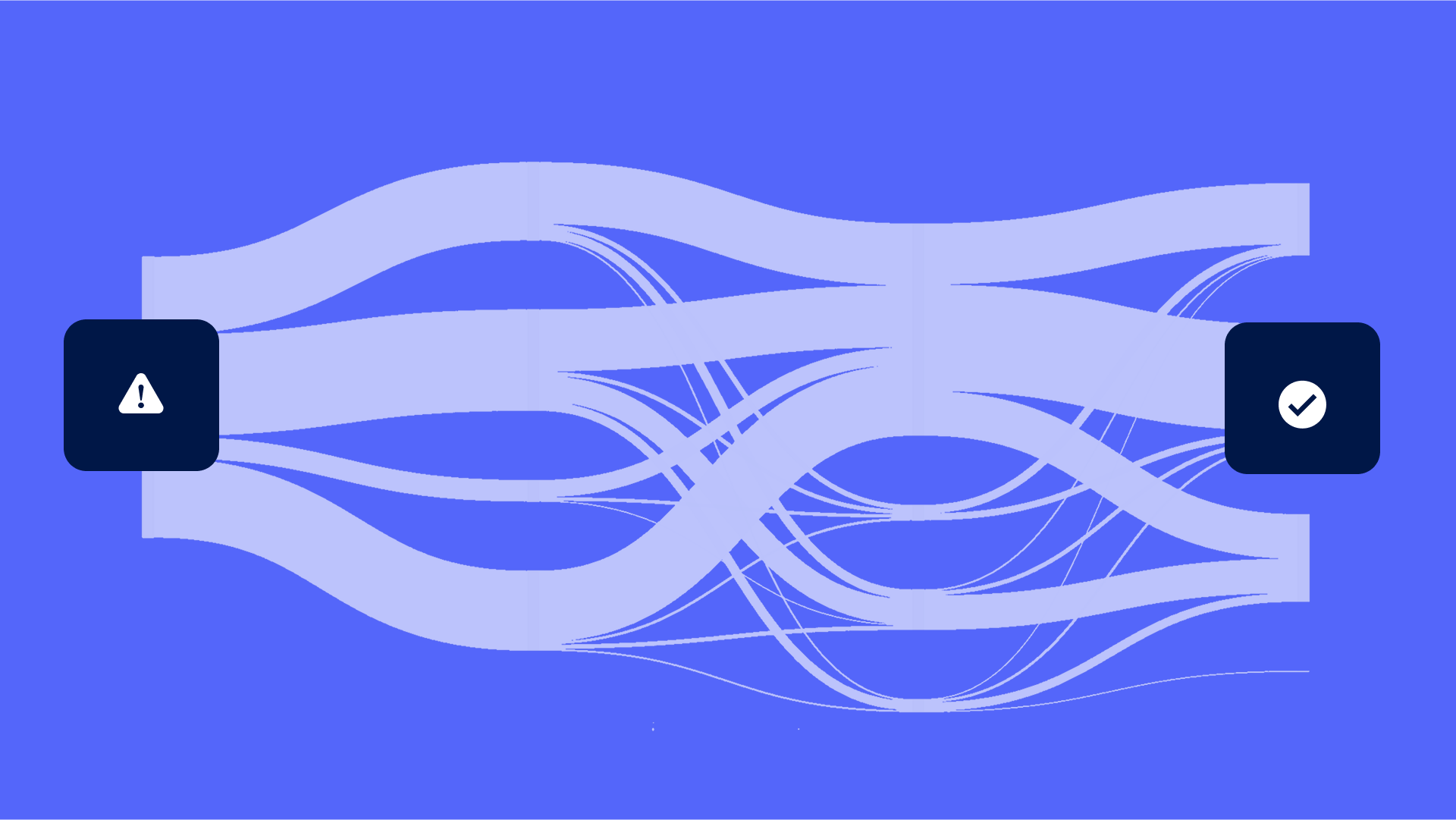

3. Reconsidering the system architecture

When we first launched our discount experiment, it was set up such that each patient was assigned to a discount group (no discount, 15%, 30%, or 45%) and then remained in that group for all future bills they might receive from the provider. This worked well initially, but when we added the ML group to the experiment, there was an issue: the ML model was only being exposed to first-time patients, because those with previous visits had already been assigned to a discount group. This was problematic because the ML model performed best on patients for whom we had more history. As a result, we restructured our experiment so that patients were kept in the same discount group throughout the course of a billing cycle, and when a billing cycle ended and a new one began, they were randomly allocated to a new group. This ensured that the ML model could do what it does best: make accurate predictions in situations where it is provided with more information.
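One way to get an assignment that is stable within a billing cycle but re-drawn for each new cycle is to hash the patient and cycle identifiers together. The sketch below is an assumption about how this could be done, not a description of our system: the group names, the identifier format, and the equal split across groups are all hypothetical.

```python
import hashlib
from typing import Sequence

# Hypothetical group labels: the four fixed arms plus the ML arm.
GROUPS = ("no_discount", "15_percent", "30_percent", "45_percent", "ml_model")


def assign_group(patient_id: str, billing_cycle_id: str, groups: Sequence[str] = GROUPS) -> str:
    """Map a (patient, billing cycle) pair to an experiment group.

    The same pair always hashes to the same group, so a patient stays put for
    the whole cycle. A new cycle id produces a new, effectively random draw.
    """
    key = f"{patient_id}:{billing_cycle_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(groups)
    return groups[bucket]


if __name__ == "__main__":
    for cycle in ("cycle-1", "cycle-2", "cycle-3"):
        print(cycle, assign_group("patient-42", cycle))
```

Because the assignment is a pure function of the two identifiers, it needs no stored state, and returning patients can land in the ML group on a later cycle.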

The moment of truth

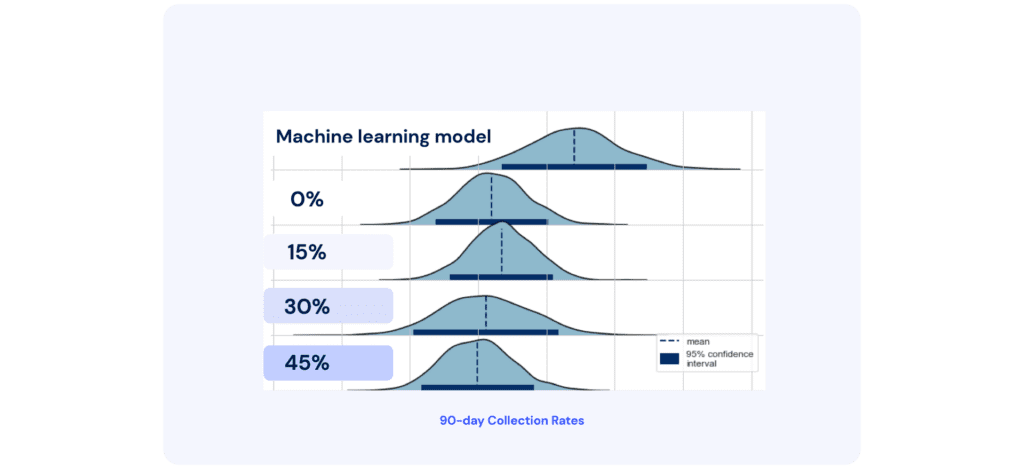

We launched our updated ML model late last year, and we are now starting to see some early results. Although not enough patients have reached the 120-day mark yet (the point at which we would make a definitive evaluation of the outcome), we can do an early evaluation at the 90-day mark. To analyze the results, we use a technique called bootstrapping, which enables us to compare the collection rate between different discount groups.
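For readers unfamiliar with bootstrapping, here is a minimal sketch of how it can be used to compare collection rates between two groups. It resamples bills with replacement and builds a percentile interval for the difference in collection rate. The data layout, the number of resamples, and the 95% interval are illustrative assumptions rather than details of our analysis.

```python
import random
from typing import List, Sequence, Tuple

# Each bill is (amount_collected, original_pre_discount_amount_billed).
Bill = Tuple[float, float]


def collection_rate(bills: Sequence[Bill]) -> float:
    """Total collected divided by total original amount billed (assumed positive)."""
    total_collected = sum(collected for collected, _ in bills)
    total_billed = sum(billed for _, billed in bills)
    return total_collected / total_billed


def bootstrap_rate_difference(
    group_a: Sequence[Bill],
    group_b: Sequence[Bill],
    n_resamples: int = 2000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Return a 95% percentile interval for rate(group_a) - rate(group_b)."""
    rng = random.Random(seed)
    diffs: List[float] = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping its original size.
        sample_a = rng.choices(group_a, k=len(group_a))
        sample_b = rng.choices(group_b, k=len(group_b))
        diffs.append(collection_rate(sample_a) - collection_rate(sample_b))
    diffs.sort()
    lower = diffs[int(0.025 * n_resamples)]
    upper = diffs[int(0.975 * n_resamples) - 1]
    return lower, upper
```

If the whole interval lies above zero, the first group's collection rate is plausibly higher than the second's; an interval that straddles zero means the data cannot yet separate the two.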

From the 90-day results, we observed that the ML model is outperforming all other discount groups, creating a nearly 10% relative lift in collection rate! This is huge because it shows that by offering discounts to patients who need them, providers can attain meaningful financial benefits, creating a win-win for patients and providers. As discussed, it is important to ensure that our model is giving discounts to patient populations who are struggling the most. Using our dedicated infrastructure for ML monitoring, we will closely examine model behavior as we continue to iterate and further improve on this work.

As part of our next steps, we will work to expand our ML discounting feature to other providers that have a large uninsured patient population. By helping these patients, we can continue to make great strides toward our mission of making healthcare more affordable and accessible.

Sumayah Rahman is a Director of Data Science (Machine Learning Engineering and Data Science Infrastructure) at Cedar